Sight and sound are out of synch


Take our test to see whether your hearing and vision are out of synch: synchrophonics.city.ac.uk

When we talk to someone, we naturally expect to hear their voice at the same time as their lips move. But in the brain, hearing and vision are processed at different times, which has led many researchers to assume that there must be mechanisms for synchronising our perceptions. With my former doctoral student Alberta Ipser, we have gathered evidence that challenges this assumption, revealing an unexpectedly profound and widespread desynchronisation of sensory timing.

Our first paper in Cortex presented the first confirmed case of a man who hears people’s voices before he sees their lips move. It also reports similar asynchronies in healthy control participants. See the case study below.

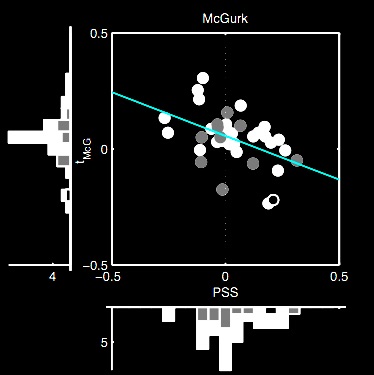

Our new paper in Scientific Reports shows that sight and sound are also out of synch in healthy people, by a different amount for each individual. These audiovisual asynchronies behave like traits: they remain stable over repetitions of the same task, but differ and are uncorrelated across different audiovisual tasks, such as identifying spoken words or individual phonemes (see example of McGurk illusion above). This suggests that different brain networks are involved in these tasks, and that each receives signals from vision and audition at different times.

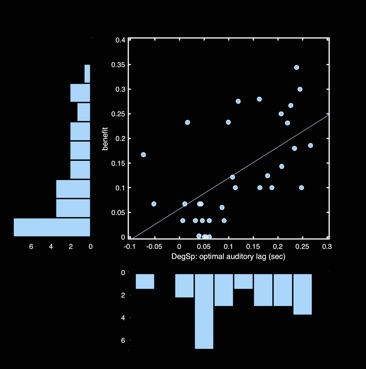

We also show that identification of words embedded in noise can be improved for some individuals by delaying sound relative to the sight of lip movements. For half of our participants, a lag of about 100 ms helped them understand 20 more words in every 100, on average (see graph below). This finding could have practical applications. For example, a hearing aid with an individually programmable delay might improve comprehension for hearing-impaired individuals; individual delays might also improve comprehension of streaming video or benefit video-based language learning; and communication in video conferencing or in noisy environments might also benefit (patent pending, see Publications).
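In signal-processing terms, an individually programmable delay is a delay line on the audio stream. The sketch below is purely illustrative and is not a description of the patented method: it assumes digitised audio processed one sample at a time, and the `DelayLine` class, its parameters and the sample rates used are hypothetical. At 16 kHz, a delay of about 100 ms means holding back 1,600 samples.

```python
from collections import deque


class DelayLine:
    """Hypothetical per-listener audio delay, implemented as a FIFO buffer."""

    def __init__(self, delay_ms: float, sample_rate: int) -> None:
        if delay_ms < 0:
            raise ValueError("delay_ms must be non-negative (sound can only be delayed)")
        # Number of samples that must be held back to achieve the requested delay.
        self.delay_samples = round(sample_rate * delay_ms / 1000)
        # Pre-fill with silence so the first outputs are zeros.
        self._buffer = deque([0.0] * self.delay_samples)

    def process(self, sample: float) -> float:
        """Push one input sample and return the sample from delay_samples steps ago."""
        self._buffer.append(sample)
        return self._buffer.popleft()


# Example: a 100 ms delay for 16 kHz audio.
line = DelayLine(delay_ms=100, sample_rate=16_000)
print(line.delay_samples)  # 1600
```

A deque gives constant-time appends and pops at both ends, so the per-sample cost does not grow with the delay; embedded hardware would more likely use a fixed-size circular array, but the logic is the same.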

Example of word identification task with different asynchronies; text shown for demonstration only.

The benefit of audiovisual desynchronisation for speech comprehension (vertical axis) plotted against each individual’s optimal asynchrony (horizontal axis). Half the participants correctly identify 20 more words in 100, on average, when sound is delayed by the amount that is optimal for them.
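The two quantities in this graph can be computed per participant roughly as follows. This is a minimal sketch under stated assumptions: each participant’s word-identification score (words correct out of 100) is available at each tested audio lag, positive lags mean sound is delayed relative to lip movements, and the lags and scores in the example are made up for illustration, not data from the study.

```python
def optimal_asynchrony(words_correct: dict[int, int]) -> tuple[int, int]:
    """Return (optimal lag in ms, benefit in words per 100 relative to a 0 ms lag).

    words_correct maps an audio lag in ms (positive = sound after lips)
    to the number of words identified correctly out of 100.
    """
    if 0 not in words_correct:
        raise ValueError("a synchronous (0 ms) condition is needed as the baseline")
    # Lag at which this participant identified the most words.
    best_lag = max(words_correct, key=words_correct.__getitem__)
    # Improvement over the physically synchronous condition.
    benefit = words_correct[best_lag] - words_correct[0]
    return best_lag, benefit


# Hypothetical participant whose comprehension peaks with sound delayed by 100 ms.
scores = {-200: 38, -100: 45, 0: 50, 100: 70, 200: 61}
print(optimal_asynchrony(scores))  # (100, 20)
```

If several lags tie for the best score, `max` returns the first in the dictionary’s insertion order, so the order in which lags are listed decides ties.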

Cortex paper: Case study and normative results

In temporal order judgements (TOJ), our case, PH, experiences simultaneity only when voices physically lag lips by about 200 ms. In contrast, he requires the opposite lag (lips lagging voices, again by about 200 ms) to experience the classic McGurk illusion (e.g. hearing ‘da’ when listening to /ba/ while watching lips say [ga]; see example above), consistent with pathological auditory slowing. These delays seem to be specific to speech stimuli.
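A simultaneity point such as PH’s ~200 ms is usually summarised as a point of subjective simultaneity (PSS): the audiovisual lag at which temporal order judgements are at chance. Psychophysical studies typically estimate it by fitting a psychometric function. The sketch below substitutes simple linear interpolation to the 50% point; that substitution is an assumption for illustration and not necessarily the analysis used in the Cortex paper, and the response proportions in the example are invented.

```python
def pss_by_interpolation(lags_ms: list[float], p_sound_first: list[float]) -> float:
    """Estimate the PSS as the lag where 'sound first' responses cross 50%.

    lags_ms must be sorted ascending; positive values mean the voice lags the lips.
    p_sound_first[i] is the proportion of 'sound first' reports at lags_ms[i].
    """
    if len(lags_ms) != len(p_sound_first) or len(lags_ms) < 2:
        raise ValueError("need at least two matching lag/proportion pairs")
    points = list(zip(lags_ms, p_sound_first))
    for (x0, p0), (x1, p1) in zip(points, points[1:]):
        # Use the first interval that brackets the 50% point.
        if (p0 - 0.5) * (p1 - 0.5) <= 0 and p0 != p1:
            return x0 + (0.5 - p0) * (x1 - x0) / (p1 - p0)
    raise ValueError("responses never cross 50%; PSS is outside the tested range")


# Invented data resembling a listener who needs the voice to lag by roughly 175 ms.
lags = [-200, -100, 0, 100, 200, 300]
p_first = [0.98, 0.95, 0.90, 0.80, 0.40, 0.10]
print(round(pss_by_interpolation(lags, p_first)))  # 175
```

Interpolation uses only the two points around the crossing, so it is sensitive to noise at those lags; a fitted psychometric function pools all the data and also yields a slope.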

We also tested normal participants, finding some further surprises:

- (1) Ordinary people can also show desynchronisation similar to PH’s.
- (2) Also like PH, normal individuals can perceive the same events as occurring at two quite different times when their timing is probed by two different concurrent measures.
- (3) Individual differences in one measure of subjective timing correlate negatively with the other measure. Thus some people require a small auditory lag for the optimal McGurk effect but an auditory lead for subjective simultaneity, while others show the opposite pattern.
- (4) Similar results are found for speech (McGurk) and non-speech (Stream-Bounce) illusions. More recently we have found this negative correlation for a variety of other speech comprehension tasks (conference poster).

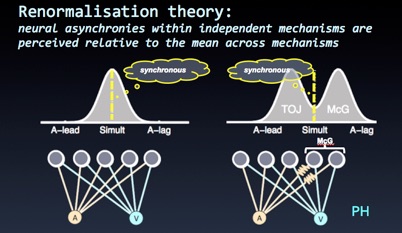

If there were synchronising mechanisms that served to unify sensory timing, we would expect different measures of multisensory timing to correlate positively, not negatively. Our paper proposes a new theory, temporal renormalisation, for understanding the relationship between the subjective timing of sensory events and timing in the brain. It assumes that the timing registered within each independent multisensory mechanism is perceived relative to the average across all mechanisms. This can explain all the results above.
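The core of the renormalisation argument can be checked with a small simulation. The sketch below is an illustrative model of our own construction, not code from the paper: each simulated person has an independent random audiovisual lag in each of several hypothetical mechanisms, each task is assumed to read out one mechanism, and that mechanism’s lag is expressed relative to the mean across mechanisms. Although the raw lags are uncorrelated, the renormalised values for two tasks come out negatively correlated: -1 with two mechanisms, and about -1/(k-1) with k mechanisms before measurement noise. The parameter values are arbitrary assumptions.

```python
import random
from statistics import fmean


def renormalise(lags_ms: list[float]) -> list[float]:
    """Express each mechanism's audiovisual lag relative to the mean across mechanisms."""
    mean_lag = fmean(lags_ms)
    return [lag - mean_lag for lag in lags_ms]


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length samples."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


def simulate(n_people: int = 2000, n_mechanisms: int = 2, sd_ms: float = 50.0,
             noise_ms: float = 0.0, seed: int = 1) -> float:
    """Correlation across people between the renormalised timing of two tasks.

    All parameters are illustrative assumptions, not estimates from the study.
    """
    if n_mechanisms < 2:
        raise ValueError("need at least two mechanisms to compare tasks")
    rng = random.Random(seed)
    task_a, task_b = [], []
    for _ in range(n_people):
        # Independent raw lags: no built-in correlation between mechanisms.
        raw = [rng.gauss(0.0, sd_ms) for _ in range(n_mechanisms)]
        rel = renormalise(raw)
        # Task A reads mechanism 0, task B reads mechanism 1, plus optional measurement noise.
        task_a.append(rel[0] + rng.gauss(0.0, noise_ms))
        task_b.append(rel[1] + rng.gauss(0.0, noise_ms))
    return pearson(task_a, task_b)


print(simulate())                               # close to -1
print(simulate(n_mechanisms=4, n_people=5000))  # close to -1/3
print(simulate(noise_ms=40.0))                  # weaker, but still negative
```

Because every mechanism is measured against a shared mean, a person whose McGurk mechanism happens to run late will tend to have other mechanisms register the same stimuli as relatively early, which is the trade-off the theory uses to explain the negative correlations.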

Our continuing work is investigating correlations of individual differences in multisensory timing with higher cognitive skills such as reading, and correlations with individual differences in brain anatomy.

Here are links to some recent conference presentation abstracts and posters:

Conference presentations and posters

Elliot Freeman, Alberta Ipser (2016). Negatively correlated individual differences in audiovisual asynchrony. AVA Christmas Meeting.

Elliot Freeman, Alberta Ipser (2012). Hearing voices then seeing lips: Fragmentation and renormalisation of subjective timing in the McGurk illusion. Seeing and Perceiving, 25 (Supplement 1), 9.

Alberta Ipser, Diana Paunoiu, Elliot Freeman (2012). Telling the time with audiovisual speech and non-speech: Does the brain use multiple clocks? Seeing and Perceiving, 25 (Supplement 1), 14-15.

Elliot D. Freeman, Alberta Ipser. Hearing voices then seeing lips: disunity of subjective timing (poster).

Alberta Ipser, Diana Paunoiu, Elliot D. Freeman. Telling the time with audiovisual speech and non-speech: does the brain use multiple clocks? (poster).

Point of subjective simultaneity (PSS, x-axis) plotted against the asynchrony giving the maximum McGurk effect (open circle: PH; grey: older participants; black: young participants).

Schematic illustrating renormalisation theory. Signals from the auditory and visual modalities arrive at different mechanisms at different times, giving a distribution of asynchronies (illustrated by bell curves). These are normalised relative to the mean, so that the mean asynchrony is perceived as synchronous. If one mechanism (e.g. one involved in McGurk integration) registers an auditory delay, then relative to the mean, other unaffected mechanisms (e.g. one involved in another task, such as temporal order judgement) will treat the same stimuli as having an auditory lead.

McGurk illusion: mismatching lips change what you hear. With eyes open you might hear ‘ba da ba da’, but with eyes closed you should hear ‘ba ba da da’ (McGurk & MacDonald, 1976).

Stream-Bounce illusion: colliding balls appear to bounce off each other when accompanied by a synchronous sound, but may appear to stream through each other when the sound is asynchronous (Sekuler et al., 1997).